The A-Ruminations team discuss decisions, biology and all that it implies.

Category Archives: Decisions

Efficient Expediency?

Steve, Tobbe and I were discussing actions and the differences between working in efficient ways and working in expedient ways.

Efficient is a non-judgemental word and rather clinical – are we working in ways that reduce or eliminate the waste of resources, effort, time and money?

Expediency has a moral element – doing things in ways that are convenient regardless of whether they are morally the right things to do.

I did not notice my attitude to visual management changing – it struck me only recently. When I first started to work with cards on the wall, I thought it was about accountability. I would feel awful if I pointed to a card that I said I would finish yesterday and I still had not completed it today. Now I feel fine using cards on the wall to see how the work is flowing. It gives me ways to capture data and articulate where the workflow is being impacted. The focus is not on me and my accountability (there are plenty of other ways to look after that in the workplace) – the focus is on how we can make the system of work flow better.

Getting back to the words, expedient and efficient. Kanban-style visual management is a very efficient way to visualise the work in order to improve it; it allows us to see queues of work that are otherwise invisible. I have also heard horror stories of it being used to victimise people, and in that respect it is an expedient tool. Someone could stand at the board, point at a card and interrogate the team about who is working on it and why it has not moved, and yelling could follow. Unfortunately, expediency is often efficient too.

Because I had not noticed the change in my attitude, I am careful to explain the difference when speaking to others who are new to visual management; they are likely to be feeling the accountability side just as I did at first.

OBSERVATIONS IN HOW THINGS GO 2

We live in a post-Industrial Revolution society, where productivity and maximising profit rule the landscape and dictate our daily lives. We strive for “Efficiency” at work and even in our daily lives, yet many of us never really think about what we’re doing and the costs of our search for better, faster, more!

Efficiency vs Expediency.

Years ago I was developing a database whose requirements were simple and basic. In essence it was a list, used to check the availability and validity of numbers. The idea was to wash a random bucket of numbers to see if they fit the criteria set by the customer. These numbers could easily have sat in a simple table and been fine, and the customer would have been happy. Yet I found the bare minimum of columns (data points) inadequate for the customer’s future needs.

Yes, I opted for Efficiency over Expediency. The reason was that I have a scientific background, and in science we never throw anything out, especially data. The data is only as good as your mindfulness and awareness while collecting it. Many a scientific study has suffered, and lost significance, because basic and minor data was not collected during the experiment. In science I would never disregard any data that might be needed later. Anticipating future data needs and possible uses is key to Efficiency.

So I was predisposed to put myself in the customer’s shoes and try to anticipate possible future data requirements. The upshot was that, for very little extra effort (a few extra columns and a few extra lines of code), the customer could benefit from future data mining and analysis.

The effort required to develop the database was essentially the same!

So why do we choose Expediency over actual Efficiency?

The daily activity of trying to finish our work items steers us towards Rapid Solutions which seem Efficient; yet this very Expediency often costs many times more in rework, rebuilds and even complete redevelopment when parameters shift as future needs become apparent.

The old saying “A stitch in time saves nine” springs to mind. Mindfully mending a small tear prevents the need for major rework and effort.

So next time, ask yourself whether this is being done for Expediency or actual Efficiency; hopefully we can then get ahead of the curve and put our future efforts into better things than Rework.

The way I see it, most of us try to do the best we can, but a few remind me of the ferreters of old who, though hired to reduce the rabbit population, would let any pregnant female rabbits go so that they would have work the following year.

Expediency has become our efficiency.

Activity vs Action.

In our modern world we are constantly expected to rush and frantically finish our tasks in the name of efficiency. Yet often we find that activity does not translate into the desired action or outcome. It is this “Rush to Action” which is the actual issue, often leading to poor outcomes and undesirable states of mind.

So why do we do it?

The basic fact is that we generally cannot distinguish between activity and action. The realm of busywork is populated with people filled with a sense of accomplishment. We are driven by the evolutionary reflex of Fight or Flight, which predisposes us to respond rapidly by reacting, and it is this proclivity for activity that gives us a sense of control while we are busy. The difference between activity and action is often only visible in the final outcome, so it is very difficult for us, or any onlooker, to distinguish the two.

Doing things makes us feel empowered and better; we feel in control and have a sense of fulfilment because we are doing something about it. Yet this activity is a hollow victory if the end goals are not reached. The true reward is when we attain our objectives and goals.

Take the real-world example of Cave Diving, a highly technical and inherently dangerous activity that also happens to be fun and scary. Imagine you are diving in a sinkhole cave system and, before you know it, you realise you’ve reached the bottom and have lost your bearings and the rest of your group. Your initial instinct would be to begin searching for the others, and this naturally becomes more frantic as time passes. Although this is a natural and understandable reaction, it usually is not the best course of action. This initial behaviour, which manifests itself as activity, will often cause greater problems. Frantic activity in such a situation is not a good idea because you will stir up silt and mud from the bottom, which muddies the water and can reduce your visibility so far that you lose your orientation and no longer even know which way is up.

Activity is not always the best action.

In the example above, reactionary activity is highly problematic, but even here calmer heads and cool action will prevail. Surrounded by zero visibility and not knowing which way is up, many would feel lost; yet the bubbles you exhale through your SCUBA gear always rise, so watching them will show you which way is up. This simple fact, and calm action, can save your life.

This predisposition to activity can, and often does, muddy the water when we are trying to determine the actions necessary to attain our goals. Running around like a headless chicken is an apt description, because we lose our senses in the frantic rush. We don’t see, don’t hear and cannot clearly understand which are the best options, opportunities and courses of action.

Splitting, Refraction and Facets

Chatting with Steve and Tobbe, we were discussing refraction: light going into a prism and then splitting into a lovely rainbow. It is useful to separate things slightly, and doing so can help us clarify terminology.

A good example is the term Product Management. I was asked for a definition, so my first reference was ‘Escaping the Build Trap’ by Melissa Perri, which has an excellent description of the role of a good product manager. This helped a lot, but when I looked into the situation a bit more, I realised that there was more to it. In conversations, not only did we start mixing together the terms Product Manager and Product Owner, but there was also something else that went beyond the role.

We landed on splitting it into a few different facets: Profession, Role, Skills and Process. There could be other facets; these were the helpful ones for our recent conversations.

The Profession of Product Management grew from marketing and brand management and has evolved as organisations place more focus on Customer experience and the technology supporting that experience. In some places your career could take you through a range of product management roles all the way up to CPO – Chief Product Officer.

The Role of a Product Manager is to be able to articulate the ‘why’ of a product or feature so that the various teams can build the ‘what’. There is a lot more to it than this – but this is also where it is useful to split out the role from the skills and process facets.

The skills of Product Management are needed in many roles. There is the skill of stakeholder engagement to understand needs, goals and drivers. Being good at this skill makes the skill of prioritisation a bit easier. Then there are other skills, such as data-driven decision making. All of us could use some of these skills in our work activities, and we certainly should develop enough of them to understand the outcomes we are aiming for (not just getting the bit of work done).

Finding Product Management embedded in Processes was a bit of a surprise. Large organisations that allocate a certain percentage of time to innovation are an example. The organisation has prioritised innovation and allocated effort (which is time and therefore budget), with the outcome being to identify and develop new opportunities. The decision about how much time to dedicate and what level of priority to give it is a product management decision that has been adopted as part of the regular planning and scheduling process. I’m certain that there are other aspects of product management that we could embed into processes, and I will be looking for them.

I have previously blogged about the gradients of misinterpretation. Those are still there; in this example there are gradients between one ‘end’ of the definition of Product Management and the other ‘end’. The point of this post is that it can be useful to put a definition through a ‘prism’ and split out some of its aspects or facets into discrete chunks.

“Need to Know Framework” derived from the PRISM

Trying to work out what you need to know, and which information is most relevant at a particular time, is like trying to hit a moving target. It is not impossible, but many factors play a role in the result. We all know that things change, yet we can fortify ourselves by becoming aware of which factors can affect us, either directly or indirectly. Even factors outside our control can be planned for and their risks mitigated.

The idea of a “Need to Know Framework” is actually an acknowledgement of how things used to be. Traditionally, small groups of people working and living together organically shared information and knowledge: each person knew what the others were doing, and a network of awareness of the whole developed naturally. In the modern workplace, scale prevents the easy flow of information between groups that are indirectly but still connected. Recent ideas such as tribes, regularly scheduled meetings and intranets are all modern devices that try to counter the paradox of increasing isolation in larger groups.

So the only way forward is to begin at the level of the individual and instil a sense of “the Whole”. It is only when we appreciate the perspectives and requirements of others in the group that the greater good of “the Whole” moves forward. Some of the greatest advances in human history have come only when a discipline was examined from another perspective.

Understanding the interconnections and dependencies that affect our tasks is crucial to understanding the environment, or “ecosystem”, that our body of work will exist in over time.

It is essential for us to examine and determine which information and facts we will require. If we attain this perspective, the maturity that develops will be essential in helping us reach our goals. A by-product of striving towards this end will be an increase in “Transparency” and a Need to Know Framework in which each piece of information can easily be prioritised by impact and according to our specific needs.

So how can we get to this point?

It starts when we realise that focusing only on the immediate and specific task at hand, although seemingly efficient, is actually detrimental to the ultimate whole. Whenever any one thing is focused on exclusively, the whole suffers. This does not mean that concentrating on a particular task is bad, but that any task, no matter how small, should be considered as part of a larger system. It doesn’t matter if your API is perfect, well coded, efficient and a masterpiece if it cannot interact with its larger ecosystem.

So how do you generate a “Need to Know Framework”?

The inspiration for this comes from Newton’s optics, in particular the refraction of light through a prism.

Using the PRISM, we can use the colours as indicators of the Importance, Directness and Impact of Information.

At the centre, Level 1 (RED) is the direct and immediate information required for you to do the task, and only the task, with little or no appreciation of where it fits into the larger landscape. This is the equivalent of just-in-time management.

Next at Level 2 (ORANGE) is the knowledge required to perform your task and deliver it.

Level 3 (YELLOW) is information which helps you locate and position the task at hand within a slightly broader framework, such as knowing how it will affect and interact with its immediate neighbours or dependencies.

Level 4 (GREEN) is information that is not usually considered necessary but, when obtained, frames the project or task and allows it to sit comfortably within the broader landscape. This type of information helps you grasp the interconnection between your task or project and those not directly involved: the first cousins of your work.

Level 5 (BLUE) frames your task or project in the broader environment, with awareness of the interconnections and nuances in upstream and downstream relationships.

Level 6 (INDIGO), like the colour, sits between BLUE and VIOLET: the interconnections and interdependencies of all the tasks and projects, yours included, and the information required to distil the overarching “5 Year Plan” or Vision.

Level 7 (VIOLET) is the highest level of separation from your work, and as such is not often seen as essential or even required. Yet it is the guidance within which all your tasks and projects have been organised to function. This is the “5 Year Plan” or Vision, developed from the Level 6 (INDIGO) information when it is evaluated in the light of the company’s place in the global economic and social ecosystems.

Agile Governance

Or…How it is Difficult to Understand a Thing if you don’t Already Know the Key Thing

Governance is a word that throws me off; I find myself wondering what it really means.

It’s supposed to mean having clarified roles, accountability and responsibilities.

It means understanding how decisions get made and who makes them.

It means making sure that policies are in place and that processes are monitored to ensure that they are working as intended.

It’s tricky to explain governance in agile when people don’t already have a good grasp of governance in traditional organisational constructs.

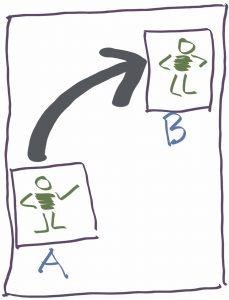

It’s similar to when I was a new video editor and our organisation bought a fancy piece of equipment called an ADO, the Ampex Digital Optics. (It flew a small picture around the screen and cost over $100k AUD in the 1980s/90s… now you can do the same thing with free software.)

We did not purchase the training reference materials and only had the operating manual, which kept instructing us to do this, that or the other with something called a ‘keyframe’.

I had no idea what this keyframe thing was – and the glossary was not helpful – so I stressed and tried a few things – and eventually called another video editor who was more experienced and asked him.

He explained that in any movement from point A to point B, the keyframes would be the start and end points. You basically put the small picture into the spot where you wanted it to start, pressed the ‘keyframe’ button, moved it to point B and hit ‘keyframe’ again; then the small picture would move from point A to point B whenever the effect was run.

Once I understood what a keyframe actually was, the whole machine was unlocked and I could use all of the features – but until I understood it, I could not even start to use this very expensive machine.

Back to governance and agile: when we understand how decisions get made, it’s quite easy to translate roles such as Product Owner into an organisation. The same decisions are being made; we are simply packaging them differently in agile teams.

When governance is not well understood, we end up circling around definitions of Product Owner and similar roles. We are already causing confusion by introducing a completely different way of working, and on top of that we need to break apart the decisions, accountabilities and responsibilities and place them into the daily planning, checking and doing aspects of agile delivery.

A way forward is to spend some time ensuring that the governance principles of the pre-agile ways of working are better understood, and only after that attempt to translate them into the agile context. It feels like taking a step backwards, but it is much better than going around in circles and talking at cross-purposes.

If governance was never really there in the first place, that is one reason for the impression that governance is absent in agile.

Personal Philosophy 101: No Solutions, just Tools


The thing that holds the thing that the Stakeholders were holding

So we all know about stakeholders…those people that care about what we are doing and should have a say in what happens.


Are there also people who care about the thing the stakeholders care about, but whom we would not call stakeholders?

What does the ‘stake’ mean when we refer to the stakeholder? According to Wikipedia, the term originally referred to the person who temporarily held the stakes from a wager until the outcome was determined. Business has since co-opted the term to mean people interested in or affected by the outcome of a project.

Tobbe and I were having burgers for lunch today… they were so tall that they had skewers in them to keep them together. The chef held the skewer (stake), and we held it when we ate our lunch, but the person serving us never touched the skewer, just the plate that supported the burger with the skewer in it.

That got us thinking: what supports the interests of the stakeholders, yet is not itself a stakeholder of a project or outcome?

It may be what we call governance: the scaffolding in an organisation that ensures business interests are looked after… ensuring that our burgers arrive safely without toppling over onto the floor.

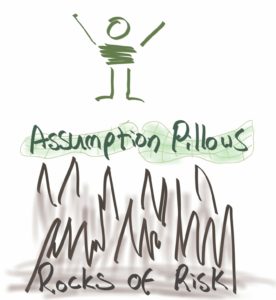

Assumption Pillows – Lightning Blog

Expanding the thoughts behind a recent tweet of mine.

‘Pondering….less obvious reasons…generate ‘pillows’ of assumptions…and are ineffective cushions against the sharp rocks of risk below’

Assumptions are like pillows: they are very comfortable and we are not aware of them much of the time. The trouble is that our assumptions and the assumptions of the people we are working with are likely to be different. When we don’t inspect our assumptions, we can overlook risks, which is the other way they are like pillows: comfortable, but no real protection from the sharp rocks beneath.

It can feel like a waste of effort to inspect our assumptions. One way is to work them into a workshop facilitation plan; that’s the easiest situation to address.

Another way is to be aware of the feeling that something is not quite right in a conversation. Once I was negotiating a contract and almost starting to argue with the other party about a particular point – both of us thought that the other one was being a bit unreasonable. After a short break, we took a moment to clarify what we were discussing and it turned out that we both agreed the point, but had been discussing quite different things before the short break.

The main risk that assumptions cause in projects is delay. If we find ways to highlight assumptions earlier we can save wasted effort in circular discussions, rework or duplication.

It’s better to see the sharp rocks of risk than to cover them with assumption pillows.